Has anyone come up with a solution for this? I still have the same problem.

2 comments up

I’ve closed all of the open ports on the router, so the network is now safe. Still, I reinstalled the OS with hive-replace, and since then selfupgrade doesn’t work.

Flash the drive from your PC with balenaEtcher or Rufus and try again, preferably on a different network from the one you’re having issues with, at least as a test. (Do you have 4G or LTE to test with, if not a separate network?)

So you are telling me that using hive-replace completely ruined the OS on the rig?

Before trying to use it the rig was completely fine, with no issues.

Now the rig is in a completely different country, thousands of km away.

So the answer is: “Do not use hive-replace because it can easily ruin your rig”.

Ok, got it.

No, the answer is to rule out variables. This sounds like a bad actor is exploiting something on your network.

But the rig was perfectly fine before hive-replace

Could be a failing/faulty drive, or a corrupt install. Won’t know without troubleshooting. Flash the latest stable image onto a new drive and see if that solves the issue.

I’ve finally had the opportunity to physically access the machine. I flashed HiveOS onto a pendrive, removed all other drives from the machine, and booted the OS from the pendrive alone.

After logging in with the same rig ID and password of the rig, I tried to launch the selfupgrade command but it failed again.

I installed the 0.6-217@220422 version, and I have Kernel version 5.10.0-hiveos #110.

So now, what is the problem?

UPDATE:

I also noticed that, with both the on-drive installation and the pendrive installation, after about 3 hours of mining the cards continue to run and hash, but the pool stats say that the miner has stopped hashing.

Every time I restart the miner, after about 3 hours, I am not mining anymore.

So, there is a sign of corruption. But I was expecting to solve it by starting the rig from a fresh installation flashed onto the pendrive. Instead, I have the exact same issue I had with the OS installed on the SSD.

So everything looks normal, except the pool doesn’t show stats after some time? Have you opened the miner in the shell to see what address it was mining to, and/or whether it was frozen, etc.?

Everything “looks” normal except the fact that the selfupgrade doesn’t work, as the thread is saying.

And the pool shows the stats, but only for the first 3 hours of mining. After that, the HiveOS UI still says that the rig is mining, but the pool stats say that the miner is inactive.

If you have direct access to the rig, check the miner screen to confirm that it is mining, and to the pool you intend to be mining with.

If your rig is online, you can tell the miner to restart and confirm it is your wallet…or not. This is also possible from the shell options.

Are you launching “selfupgrade” from the shell so you can see precisely where it is failing?
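If it helps, here is a minimal sketch for capturing that output so it can be pasted into the thread. Only `selfupgrade` comes from this thread; the `run_and_log` helper and the log path are made up for illustration, and it assumes a POSIX shell on the rig.

```shell
#!/bin/sh
# run_and_log LOGFILE COMMAND [ARGS...]   (hypothetical helper, not part of HiveOS)
# Runs COMMAND, saves its stdout and stderr to LOGFILE, prints the captured
# output, then appends the command's exit status to LOGFILE.
run_and_log() {
    log_file="$1"
    shift
    "$@" >"$log_file" 2>&1
    status=$?
    cat "$log_file"
    echo "exit status: $status" >>"$log_file"
    return "$status"
}

# Example use on the rig (the log path is an assumption):
#   run_and_log /tmp/selfupgrade.log selfupgrade
```

An exit status of 127 is the usual shell convention for “command not found”, so that number alone would be worth mentioning if you see it.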

Did you read all of the messages above in this thread?

I have the exact same issue as the thread owner. And the answer to your question is in the replies above.

Also, I already said that even if I restart the entire rig, the same thing happens after about 3 hours: the GPUs continue to mine, the power consumption and temperature remain the same, and the miner log shows the proper hash rate, but the pool stats record only the first 3 hours of mining and then the pool doesn’t see the rig anymore. When I reboot it, the pool again receives only the first 3 hours of shares.

I did read it all.

Your “3 hour symptoms” are not the same.

You have not posted information on your pool choice, miner choice, or if you have persistent miner logs enabled.

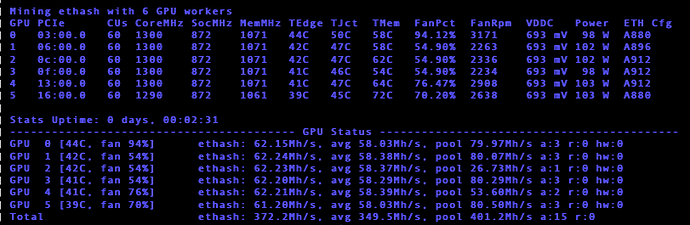

In fact, you have yet to post a visual from the hiveos.farm worker interface or the pool stats.

Not sure how anyone can assist you with such a lack of information.

You don’t find information about my “3 hour issue” because this thread is about the selfupgrade command that doesn’t work.

I added the information about the 3 hour issue just to let all know that my installation could be in fact corrupted.

Anyway, the main issue here is that the selfupgrade command doesn’t work for me even after running HiveOS from a fresh new installation from a pendrive.

Does anybody know whether using the same rig ID could have carried the issue I had before over into the fresh installation? Otherwise I don’t know how it is possible that even a fresh installation has the same issue as the one installed on the SSD.

This is potentially worse than corruption, but you have provided no data.

Many potential reasons, yes: a bad install, hacking, network issues, and the list goes on. Yet you have not provided a screenshot of the failure from the shell.

The rig.conf or the rig ID entered during the first-run process: no. Malware on the station used for flashing: maybe.

Unfortunately, USB drives have their own issues.

Does the hive-replace -y --stable command work when entered via the shell interface?

As I told you before, all the information about the selfupgrade has already been shared in the previous replies.

Here is where the screenshot of the shell was posted. Even if I’m not the one who posted it, I have the same result.

I don’t think that the install or the computer used to flash the image can be the issue, because the issues that I’m seeing now after the new installation are exactly the same as before. The reason I decided to do a fresh installation was exactly these two issues: the selfupgrade command and the 3-hour maximum of mining. I did the new installation to solve them.

One more piece of information: both problems started after I executed the hive-replace command in April. Once the process finished, the two issues started and never stopped, not even after a new installation.

A “command not found” result has more than one cause. And in that poster’s image, not yours, there are clearly install errors.

Did yours have the exact same install errors? If so, attempt the below:

If the hive-replace -y --stable command runs properly on YOUR rig now, that would be a significant detail in the troubleshooting. Of course, if successful, it will reimage the target media, which you would then want to lock down securely right away: disable VNC, change passwords, etc.

If your rig provides you a command not found error for hive-replace, your rig is exhibiting classic direct compromise from outside parties.
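A quick way to check this from the shell, as a rough sketch: `command -v` is a standard POSIX shell builtin, while the `check_cmd` helper name is just something made up for this post.

```shell
#!/bin/sh
# check_cmd NAME   (hypothetical helper, not part of HiveOS)
# Reports whether NAME resolves to something the current shell can run.
# Returns 0 if it resolves, 1 if it does not.
check_cmd() {
    if resolved="$(command -v "$1" 2>/dev/null)"; then
        echo "$1: resolves to $resolved"
    else
        echo "$1: not found on PATH"
        return 1
    fi
}

# Example use on the rig, with the two commands discussed in this thread:
#   check_cmd hive-replace
#   check_cmd selfupgrade
```

This only tells you whether the name resolves, not whether the file behind it is intact, so treat a “resolves to” line as a first data point rather than a clean bill of health.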

Let’s confirm or deny that worst case scenario.

I tried the hive-replace command many times before deciding to do a fresh install.

Do you think that running it now from the USB pendrive could give a different result from before, when I was on the SSD installation?

When I tried it with the SSD installation, the hive-replace command always completed successfully, but after the next boot the two issues were always there.

Anyway, if you really want to see my shell output I can provide it, but it is exactly the same as the one above (I’m talking about the shell output, not the entire screenshot).

It is a good sign that hive-replace works.

Humbly, I’d suggest working with your original SSD, as it will be the long-term platform and it would support persistent logging to track that “3 hr” issue.

I’d expect we are chasing at least two issues.