Update:

Thanks to the guidance, I tore everything down and built it back up slowly. Along the way it dawned on me to read up on the motherboard I have, and I found the following, which provided some understanding.

- The PCI_E4 slot will be unavailable when an M.2 SSD module has been installed in the M.2_2 slot.
- The PCI_E2 slot will be unavailable when an expansion card has been installed in the PCI_E5 slot.
- The PCI_E3 slot will be unavailable when an expansion card has been installed in the PCI_E6 slot.
- If you install a lar

This showed me that having 6 slots does not necessarily mean all 6 are available simultaneously. So I double-checked my setup to make sure I was not blocking the slots I was using. During this “re-configuration”, I noticed that the two longer (x16) PCIe slots have a light under them when they are active, and the first one was not active. I am not sure why the stubs would not work in that slot. Then I noticed that the card really has to be seated securely for the light to indicate that the slot is active. Since that slot was finicky, I moved things around.
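For anyone planning a similar layout, the slot-sharing rules quoted above can be written down as a small lookup and checked before plugging anything in. This is only a rough sketch: the three rules come straight from the excerpt, while the function name `find_conflicts` and the example layout (including the slot name `PCI_E1`) are my own assumptions for illustration.

```python
# Slot-sharing rules as quoted in the excerpt above.
# Each pair is (slot that becomes unavailable, slot that disables it when populated).
SHARING_RULES = [
    ("PCI_E4", "M.2_2"),
    ("PCI_E2", "PCI_E5"),
    ("PCI_E3", "PCI_E6"),
]


def find_conflicts(populated):
    """Return (unavailable_slot, blocking_slot) pairs where both slots are populated."""
    used = set(populated)
    return [
        (unavailable, blocker)
        for unavailable, blocker in SHARING_RULES
        if unavailable in used and blocker in used
    ]


if __name__ == "__main__":
    # Hypothetical layout, not my actual one.
    layout = ["PCI_E1", "PCI_E2", "PCI_E5", "PCI_E6"]
    for unavailable, blocker in find_conflicts(layout):
        print(f"{unavailable} is unavailable while {blocker} is populated")
```

An empty result only means none of the three quoted rules is violated; the excerpt is cut off, so there may be other restrictions this does not cover.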

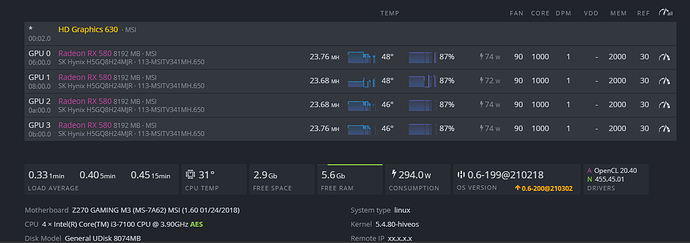

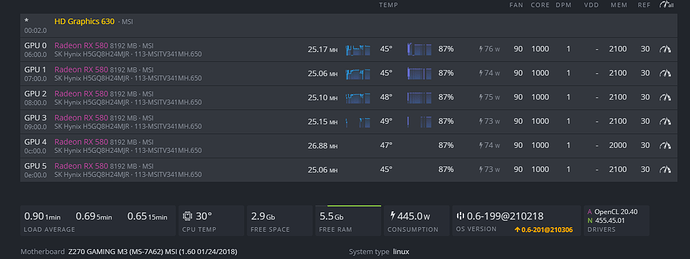

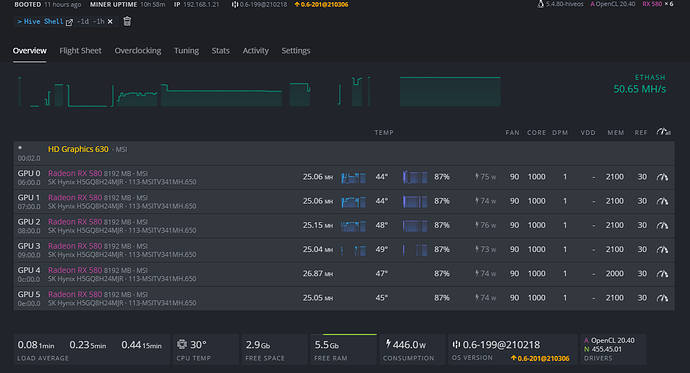

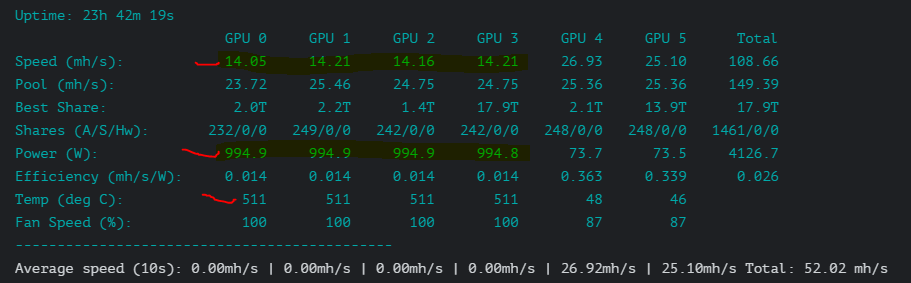

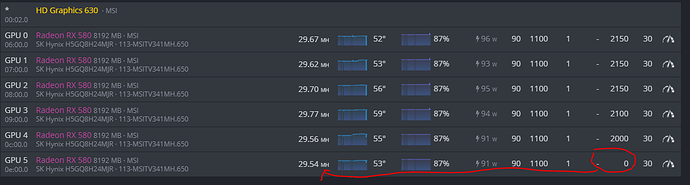

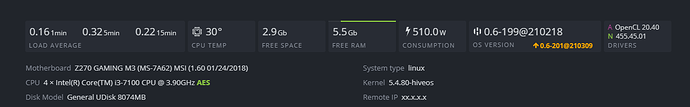

I then ran into some posts that mentioned changing the PCI latency and generation settings in the BIOS. These settings did seem to have an impact. I found that keeping the generation on “auto” worked best for me; setting it to 1 or 2 just kept things unstable. Next was the latency. I started from the lowest value and moved up until I got a consistent boot with all the GPUs active and seen by Hive and the miner. This setting is currently at around 128+ cycles, with 4 GPUs running at the moment. Two of the GPUs are on a 4-port USB PCIe expansion card due to the slot limitation.
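When stepping through BIOS settings like this, it helps to have a quick check after each boot that the expected number of GPUs actually shows up on the PCI bus. Below is a minimal sketch, assuming a Linux-based rig where `lspci` is available; it counts lines of `lspci` text that carry a display-type device class. The helper name `count_gpus` and the sample text are made up for illustration, and an integrated GPU (if the board has one enabled) would be counted as well.

```python
# Device class labels that lspci prints for display-type devices.
GPU_CLASSES = (
    "VGA compatible controller",
    "3D controller",
    "Display controller",
)


def count_gpus(lspci_text):
    """Count lines in lspci output whose device class looks like a GPU."""
    return sum(
        1
        for line in lspci_text.splitlines()
        if any(cls in line for cls in GPU_CLASSES)
    )


if __name__ == "__main__":
    # Made-up sample in the shape of lspci output, not taken from my rig.
    sample = "\n".join([
        "00:14.0 USB controller: Example Vendor USB 3.0 Host Controller",
        "01:00.0 VGA compatible controller: Example Vendor GPU",
        "01:00.1 Audio device: Example Vendor HD Audio",
        "04:00.0 VGA compatible controller: Example Vendor GPU",
        "07:00.0 VGA compatible controller: Example Vendor GPU",
        "08:00.0 VGA compatible controller: Example Vendor GPU",
    ])
    expected = 4
    found = count_gpus(sample)
    print(f"found {found} of {expected} GPUs")
```

On a real rig the text would come from running `lspci` in a shell and passing its output to `count_gpus`.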

I would connect the final two GPUs, but my USB cables are too short for my configuration. So I have to figure that out or get longer USB cables. From what I understand, they should be USB 3.0 and not beyond a certain length?

Until then, I will let things run for a day or two before mucking around with overclocking and/or flashing the BIOS I have on them. Any comments/suggestions would be appreciated.

Thank you for the feedback thus far.